Ever wondered why one university shows up at the top of every list while another with the same course doesn’t even make the top 50? It’s not random. UK university rankings aren’t just opinions; they’re built from real data, collected every year by independent organizations. But the numbers don’t tell the whole story. If you’re trying to pick a university, knowing how these rankings work can save you from making a costly mistake.

Who Creates the UK University Rankings?

Three main players shape how UK universities are seen by students and employers: QS World University Rankings, Times Higher Education (THE), and The Guardian University Guide. Each uses different methods, so a university might be #1 in one list and #25 in another. That’s not a contradiction; it’s a difference in focus.

QS, based in London, looks at global reputation, faculty-to-student ratios, and international student numbers. THE, also UK-based, weighs research output and citations heavily, using citation data from Elsevier’s Scopus database. The Guardian, aimed at undergraduates, focuses on teaching quality, student satisfaction, and career outcomes. None of them are wrong. But they’re not measuring the same thing.

What Data Actually Goes Into the Rankings?

Let’s break down what each ranking looks at, and what it ignores.

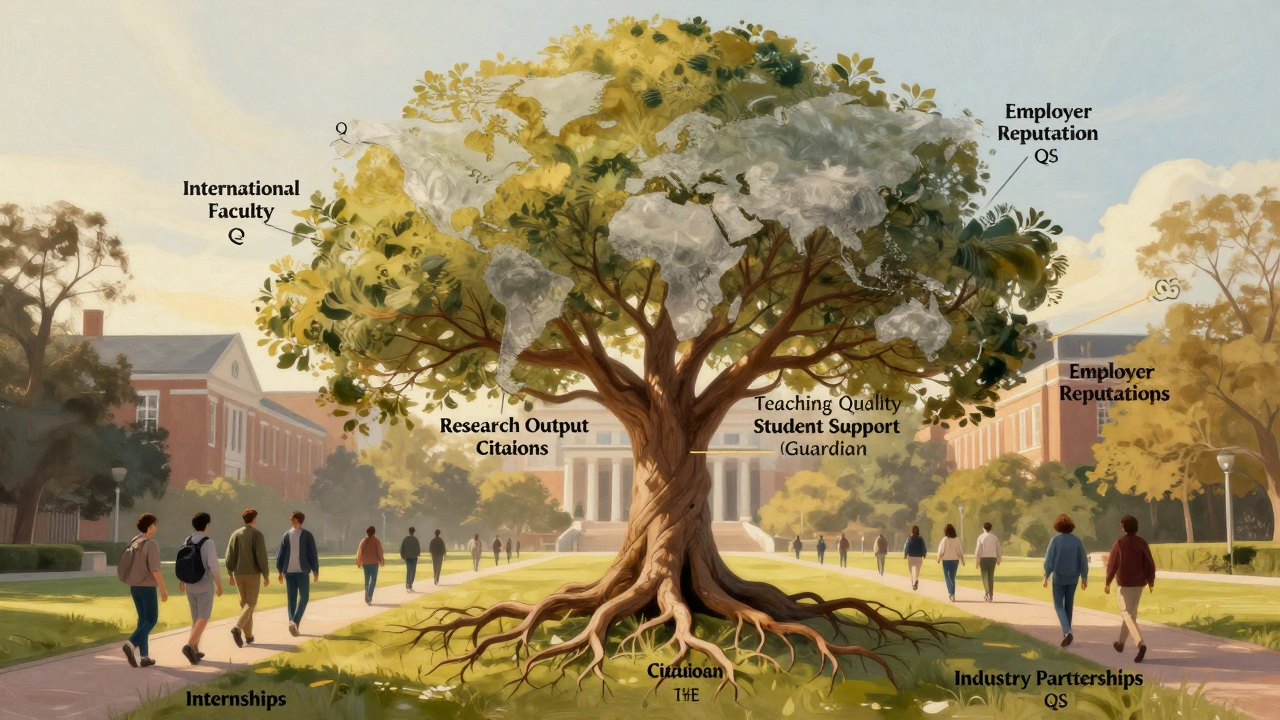

QS World University Rankings has traditionally used six metrics (its 2024 edition changed some weights and added new indicators):

- Academic reputation (40%) - based on surveys of over 150,000 academics worldwide

- Employer reputation (10%) - feedback from 100,000+ employers

- Faculty-to-student ratio (20%) - how many staff teach each student

- Citations per faculty (20%) - how often research is referenced by others

- International faculty ratio (5%) - percentage of staff from outside the UK

- International student ratio (5%) - percentage of students from abroad

Times Higher Education has used 13 indicators grouped into five areas (its 2024 edition revised these to 18 indicators):

- Teaching (30%) - learning environment, staff qualifications, student-to-staff ratios

- Research (30%) - volume, income, reputation

- Citations (30%) - research influence, measured by citations per paper

- International outlook (7.5%) - staff, students, and research collaboration

- Industry income (2.5%) - research funded by companies

The Guardian University Guide uses eight metrics, all focused on the student experience:

- Student satisfaction (25%) - from the National Student Survey

- Staff-to-student ratio (15%) - how much attention you’ll get

- Academic services spend (10%) - libraries, labs, IT support

- Facilities spend (10%) - sports, housing, social spaces

- Career prospects (15%) - how many graduates are in professional jobs 15 months after graduating

- Value added (10%) - how much better students do compared to their entry grades

- Student continuity (10%) - how many students stay on and finish

- Degree results (5%) - percentage of Firsts and 2:1s awarded

Notice something? The Guardian doesn’t care about global citations or international faculty. It cares if you’ll be happy, supported, and employed. QS cares if your professor’s paper gets cited in Tokyo. THE wants to know if your university is publishing groundbreaking research. They’re all valid, but they answer different questions.
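At their core, these tables combine indicator scores using weights like the percentages listed above. The sketch below is a simplified illustration, not any publisher’s actual method: it assumes three hypothetical universities ("Uni A", "Uni B", "Uni C") with made-up scores already normalized to 0-100, and two invented weighting schemes. Real rankings normalize and combine their data in more involved ways.

```python
# Hypothetical indicator scores (0-100, already normalized). Not real data.
UNIVERSITIES = {
    "Uni A": {"research": 95, "teaching": 60, "satisfaction": 55, "careers": 70},
    "Uni B": {"research": 70, "teaching": 85, "satisfaction": 90, "careers": 80},
    "Uni C": {"research": 80, "teaching": 75, "satisfaction": 70, "careers": 75},
}

# Two invented weighting schemes (each sums to 1.0). They only loosely echo
# the research-heavy and student-focused tables described above.
RESEARCH_HEAVY = {"research": 0.6, "teaching": 0.2, "satisfaction": 0.1, "careers": 0.1}
STUDENT_FOCUSED = {"research": 0.1, "teaching": 0.3, "satisfaction": 0.35, "careers": 0.25}


def composite(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of one university's indicator scores."""
    return sum(scores[indicator] * weight for indicator, weight in weights.items())


def rank(universities: dict[str, dict[str, float]], weights: dict[str, float]) -> list[str]:
    """University names ordered from highest to lowest composite score."""
    return sorted(
        universities,
        key=lambda name: composite(universities[name], weights),
        reverse=True,
    )


if __name__ == "__main__":
    print("Research-heavy: ", rank(UNIVERSITIES, RESEARCH_HEAVY))
    print("Student-focused:", rank(UNIVERSITIES, STUDENT_FOCUSED))
```

With these made-up numbers, Uni A tops the research-heavy table and comes last in the student-focused one, while Uni B does the reverse. Neither university changed; only the weights did.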

Why Do Rankings Change Year to Year?

Universities rarely move up or down because they suddenly got better or worse. More often, the underlying data shifted slightly.

In 2024, the University of Edinburgh dropped from 16th to 22nd in THE rankings. Why? A small dip in research income and fewer citations per paper. Not because teaching got worse. Not because students were less happy. Just because the research output numbers changed by a fraction.

Meanwhile, the University of St Andrews climbed in The Guardian’s list because more students reported feeling supported. That’s a real win for undergrads, but it barely moves the needle in QS or THE, since neither uses student satisfaction scores.

These shifts aren’t about quality. They’re about metrics. A university can be excellent at teaching but low on research output, and still be the best place for you.

What Rankings Don’t Tell You

Rankings leave out a lot. They don’t tell you:

- What the campus actually feels like

- How easy it is to get internships in your field

- Whether your course has industry partnerships

- If the department has professors who actually teach undergrads

- What the local housing market is like

- How supportive the mental health services are

Take Durham University. It’s often ranked lower than Oxford or Cambridge in QS because it doesn’t produce as much high-citation research. But if you’re studying history or theology, Durham’s small-group tutorials and close-knit college system make it one of the most effective places in the UK to study those subjects. That’s not in the numbers.

Or consider the University of the Arts London. It ranks low in THE because it doesn’t focus on STEM research. But if you want to be a fashion designer or animator, it’s one of the top schools in Europe. Rankings don’t reflect that.

How to Use Rankings Wisely

Don’t pick a university based on its rank. Pick it based on what matters to you.

Here’s how:

- Decide what you care about most: teaching quality, job outcomes, campus life, research opportunities?

- Look at the ranking that matches your priority. Use The Guardian for teaching, THE for research, QS for global reputation.

- Check the course-specific data. A university might be average overall but brilliant in your subject. Look at subject rankings, not just overall.

- Check official course data on Discover Uni (which replaced Unistats) and read student reviews on The Student Room. Real people talk about things rankings ignore.

- Visit if you can. Walk around the campus. Talk to current students. See if the vibe fits.

For example, if you’re studying engineering and want to work in aerospace, check which universities have partnerships with Rolls-Royce or Airbus. That’s more valuable than a high overall rank.

If you’re studying psychology and care about mental health support, look at student satisfaction scores. That’s the Guardian’s strength.

Common Misconceptions

Myth: Higher rank = better education. Not true. A university ranked 40th might have a more personalized program than the one at #10.

Myth: Only Russell Group universities are good. Many non-Russell Group schools, like Loughborough or Bath, rank higher than some Russell Group members in teaching and graduate outcomes.

Myth: Rankings predict future earnings. Your career depends more on your internships, projects, and networking than your university’s name on a list.

Myth: International students should only pick top 10 schools. Many international students end up happier and more successful at mid-ranked schools with strong support services and smaller classes.

Final Thought: Rankings Are a Map, Not the Territory

Think of rankings like a weather forecast. It tells you if it’s likely to rain, but it doesn’t tell you if you’ll enjoy walking in the rain, or if you need an umbrella because you’re going to a job interview. The same applies to university rankings. They give you a direction, but the real decision comes from your goals, your learning style, and what kind of experience you want.

Don’t chase a number. Chase the right fit.

Are UK university rankings reliable?

They’re reliable as tools for comparing specific metrics like research output, student satisfaction, or graduate employment-but not as a measure of overall quality. Each ranking uses different data, so they’re useful when you know what you’re looking for. Don’t treat them as truth. Treat them as clues.

Which ranking should I trust the most?

It depends on your goals. If you want to work in academia or research, trust THE. If you care about student experience and job outcomes, trust The Guardian. If you’re planning to work abroad, QS gives you global recognition. Use all three, but focus on the one that matches your priorities.

Why do some universities drop in rankings even if nothing changed?

Because rankings are relative. If other universities improve their scores, even slightly, your school can drop even if its own numbers stayed the same. For example, if five universities increase their research citations, the score needed to make the top 10 moves up. Your school didn’t get worse. The competition just got stronger.
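A few numbers make the relative effect concrete. This sketch assumes hypothetical overall scores for four made-up universities; "Your Uni" keeps exactly the same score in both years while its rivals improve slightly.

```python
def position(scores: dict[str, float], name: str) -> int:
    """1-based rank of `name` when sorted by overall score, highest first."""
    ordered = sorted(scores, key=lambda uni: scores[uni], reverse=True)
    return ordered.index(name) + 1


# Hypothetical overall scores (not real data). "Your Uni" scores 80.0 both years.
LAST_YEAR = {"Your Uni": 80.0, "Uni P": 79.0, "Uni Q": 78.5, "Uni R": 78.0}
THIS_YEAR = {"Your Uni": 80.0, "Uni P": 80.5, "Uni Q": 80.2, "Uni R": 79.0}

if __name__ == "__main__":
    print("Last year:", position(LAST_YEAR, "Your Uni"))
    print("This year:", position(THIS_YEAR, "Your Uni"))
```

Here "Your Uni" slips from 1st to 3rd without its own score moving at all.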

Do employers care about university rankings?

Some do, especially in finance, law, or consulting, where brand recognition matters. But most employers care more about your skills, internships, projects, and how you present yourself. A strong portfolio or relevant work experience often outweighs a high-ranking university name.

Can a university improve its ranking quickly?

Yes, but only in areas the ranking measures. A university can boost its student satisfaction score by improving support services. It can raise its research ranking by hiring more published academics. But you can’t fake reputation or citations overnight. Real improvement takes years of consistent effort.

Are there any UK universities that are underrated in rankings?

Definitely. Schools like the University of Bath (engineering), Loughborough (sports science), and the University of Sussex (social sciences) often rank below their reputation. They have strong industry links, high graduate employment rates, and excellent teaching, but don’t produce the volume of high-citation research that QS and THE reward.

Next Steps: What to Do Now

Don’t wait for the next ranking to come out. Start by listing your top three priorities: Is it small classes? Career support? Research opportunities? Then go to each ranking website (QS, THE, and The Guardian) and filter by your subject. See where your top choices land in each.

Then, reach out to current students. Ask them: "What’s one thing no ranking mentions that made a difference for you?" You’ll get answers no list can give you.