Every year, hundreds of thousands of final-year students in the UK fill out the National Student Survey (NSS). They rate their courses on things like teaching quality, assessment clarity, and support services. Universities proudly display the results - high scores mean better rankings, more applicants, and more funding. But here’s the real question: do these surveys actually tell you what kind of education you’ll get?

What the NSS Actually Measures

The National Student Survey, run by the Office for Students (OfS), asks final-year undergraduates around 27 questions grouped into themes that include teaching, learning resources, academic support, organization, assessment, and student voice. The results are reported as percentages. For example, a course page might say that 89% of students at University X agreed their course was intellectually stimulating.

These numbers feed directly into the UK’s best-known university league tables, such as those published by The Guardian and The Times & Sunday Times, where satisfaction measures often make up roughly 20-30% of the total score. Because many universities sit close together in these tables, a modest shift in how many students answer positively can move a university several places. But the survey doesn’t measure outcomes like graduate salaries, research impact, or how well students actually learned. It measures how satisfied students felt at the end of their degree.

Why Satisfaction Doesn’t Equal Quality

There’s a big difference between feeling happy and getting a good education. A student might love their course because their lectures are relaxed, deadlines are loose, and exams are easy. That’s satisfying. But it doesn’t mean they’re prepared for a job or grad school. Conversely, a tough course with high expectations might leave students stressed and scoring low on the survey - even if they’re learning more than students at a "higher-ranked" school.

A 2023 analysis by the Higher Education Policy Institute found that universities with lower entry requirements often scored higher on the NSS. Why? Because they tend to offer more personalized support, smaller class sizes, and fewer rigid assessments. Meanwhile, elite institutions with high entry grades, like Oxford and Cambridge, often score lower on student satisfaction. That is not necessarily because they’re worse; their standards may be higher and their workload heavier.

The Manipulation Problem

Universities know how much the NSS affects their rankings. So they’ve started treating it like a marketing campaign. Some departments run "NSS prep" sessions, telling students what answers will boost their scores. Others offer incentives - free pizza, raffles, even extra credit - to get more students to complete the survey. A 2024 internal report from a Russell Group university admitted that staff were encouraged to "remind students that their feedback helps improve the course," with subtle suggestions to focus on positive responses.

This isn’t fraud. But it’s not neutral either. When you’re nudged to answer a certain way, the data stops reflecting genuine experience. It becomes a performance metric, not a reflection of reality. And when rankings are built on manipulated data, they lose meaning.

Who’s Left Out?

The NSS only surveys final-year undergraduates. Postgraduates are left out entirely, and so is anyone not yet in their final year, including first- and second-year students and those on foundation years. That’s a problem. At most universities, the majority of students on campus in any given year aren’t included in the survey - yet their experience shapes the campus culture, resources, and even the teaching style.

Take international students. They often face language barriers, cultural isolation, and visa stress. Final-year international undergraduates are surveyed, but their answers are folded into course-wide percentages, so their specific struggles rarely stand out in the rankings. A university might score highly on "academic support" because it helped UK students navigate the system, while failing to provide adequate language support for non-native speakers. The ranking doesn’t show that.

What Rankings Miss: The Real Indicators of Quality

If you want to know if a university is good, don’t just look at the NSS. Look at:

- Graduate outcomes: What percentage of graduates are in professional jobs or further study 15 months after graduation? The Graduate Outcomes survey tracks this, and it is more useful than the NSS for judging where a course leads.

- Student-to-staff ratios: Lower ratios usually mean more personal attention.

- Research power: How much of the university’s research is rated "world-leading"? The Research Excellence Framework (REF) publishes these ratings.

- Dropout rates: High dropout rates often signal poor support or mismatched expectations.

- Department-specific feedback: Rankings average everything. But a university might have a brilliant engineering department and a terrible art department. Check department reviews on Reddit, The Student Room, or LinkedIn.

For example, the University of Dundee scored lower than the University of Edinburgh on the NSS in 2024. But Dundee’s medical school had a 98% graduate employment rate and one of the lowest dropout rates in the UK. The NSS didn’t capture that.

What Students Should Do Instead

Don’t pick a university based on a ranking that leans heavily on survey numbers. Do this:

- Find the NSS results for your specific course, not just the whole university. The official Discover Uni website publishes them at course level.

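- Read what students say in their own words. NSS free-text comments go to universities rather than being published, so look for open-ended reviews on student forums and review sites. Look for patterns: "lecturers are unresponsive," "labs are outdated," "no career advice."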

- Compare with the Graduate Outcomes data. Is the course leading to jobs you want?

- Talk to current students. Join Facebook groups, Reddit threads, or Discord servers for that course.

- Visit the campus. Sit in on a lecture. Ask about support for mental health, disabilities, or international students.

One student told me she chose a university with a 78% NSS score over one with 92% because the lower-ranked school had a mandatory internship program and real industry connections. She got a job before graduation. The other school had high satisfaction scores - but no one could tell her where graduates ended up.

The Bigger Picture

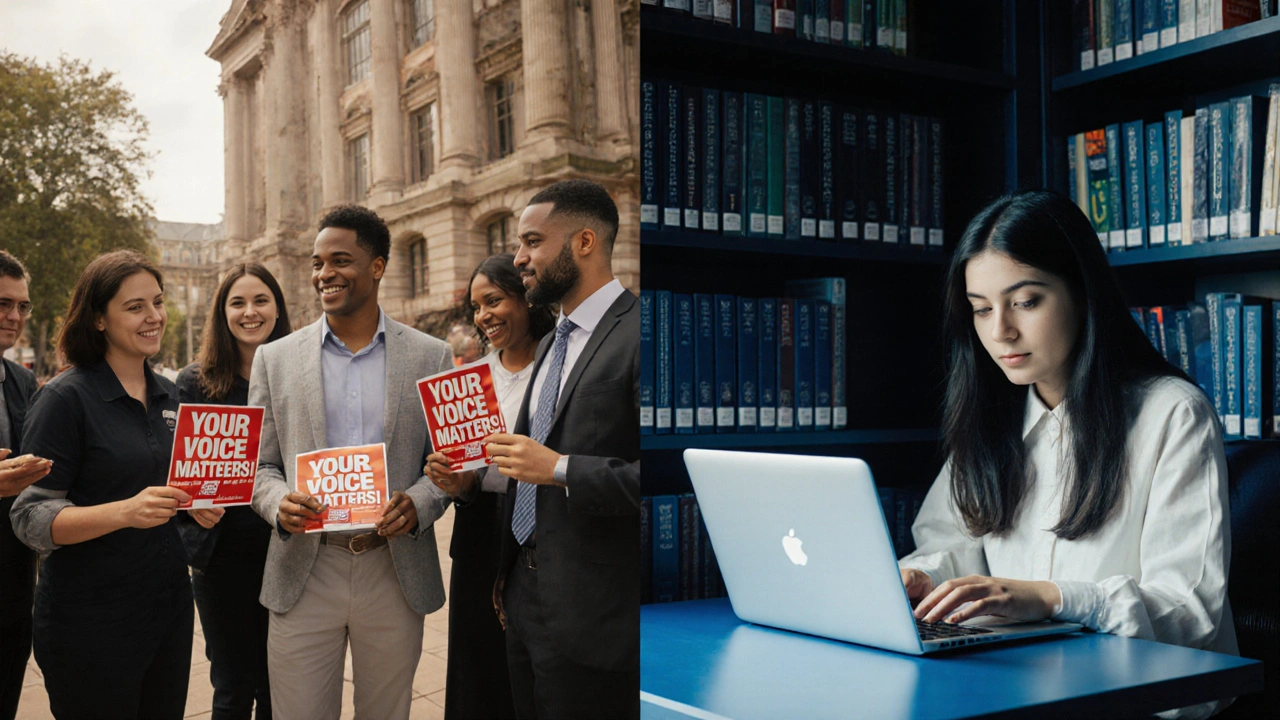

The NSS was created with good intentions - to give students a voice and push universities to improve. But when rankings turn student feedback into a competitive game, it distorts the goal. Universities focus on pleasing survey respondents instead of serving learners. And students end up misled.

There’s a growing movement to reform how rankings work. Some experts suggest weighting the NSS less and adding real outcomes. Others want the survey extended to all student groups, not just final-year undergrads. Until then, treat NSS scores like a single weather reading: useful, but not the whole forecast.

If you’re choosing a university, look beyond the numbers. Ask who’s being counted. Ask what’s being measured. And ask what happens after graduation. That’s the only way to know if a school is truly right for you.

Are UK university rankings based mostly on student surveys?

Not mostly, but substantially. Student satisfaction measures drawn from the NSS often make up 20% to 30% of rankings from The Guardian and The Times & Sunday Times. Other factors like graduate employment, research quality, student-to-staff ratios, and entry requirements also count. Rankings are a mix, but NSS scores carry heavy weight, partly because they’re easy to collect and compare.

Can universities fake their NSS scores?

They can’t falsify data, but they can influence responses. Some departments run "NSS prep" sessions, offer incentives like free food or extra credit, or gently guide students toward positive answers. The Office for Students treats steering students toward particular answers as "inappropriate influence," but the line between encouraging participation and nudging responses is blurry and hard to police. That’s why many experts question whether NSS scores reflect true experience.

Why do top universities like Oxford and Cambridge score lower on student satisfaction?

They often have higher academic pressure, heavier workloads, and more competitive environments. Students may feel overwhelmed, even if they’re learning a lot. Meanwhile, universities with lower entry requirements may offer more personalized support and relaxed expectations, leading to higher satisfaction scores. High scores don’t always mean better education; sometimes they reflect easier conditions.

Does a high NSS score mean I’ll get a better job after graduation?

Not necessarily. NSS measures how satisfied students felt during their course - not how well they were prepared for work. Graduate Outcomes data shows that some universities with low NSS scores have higher employment rates. For example, a technical course with tough assessments might score poorly on satisfaction but produce graduates who get hired quickly. Look at job outcomes, not just survey scores.

Should I ignore university rankings entirely?

No - but don’t rely on them. Use rankings as a starting point, not a final decision. Check the underlying data: graduate employment rates, dropout rates, student-to-staff ratios, and department-specific reviews. Talk to current students. Visit campuses. Rankings can help narrow options, but real insight comes from digging deeper than the headline number.